Ask a question through a video and Google now has several answers for you, and that’s a first!

Rival search engines already struggle to win market share, and with a new video understanding feature in Lens making motion-based research easier, Google looks unlikely to give competitors room to catch up anywhere in the world a question is being asked. That includes Nigeria, where up to 98.88 percent of users prefer its engine even though rivals try very hard to lure them away.

“We previewed our video understanding capabilities at I/O, and now you can use Lens to search by taking a video, and asking questions about the moving objects that you see,” said Elizabeth Reid, Head of Google Search and Vice President of Engineering.

In any scenario where moving images are needed to get an answer, a user can simply open Lens in the Google app on Android or iPhone. They hold down the shutter button to start recording, then ask their question out loud, such as “why are they swimming together?”

Once these steps are complete, the recorded clip and the question are processed. According to Reid, the result is an AI Overview, delivered alongside helpful information sourced from the web.

The process is demonstrated on the Lens product page. It shows a school of fish circling and the follow-up question appearing on the mobile screen, with artificial intelligence doing the rest of the work.

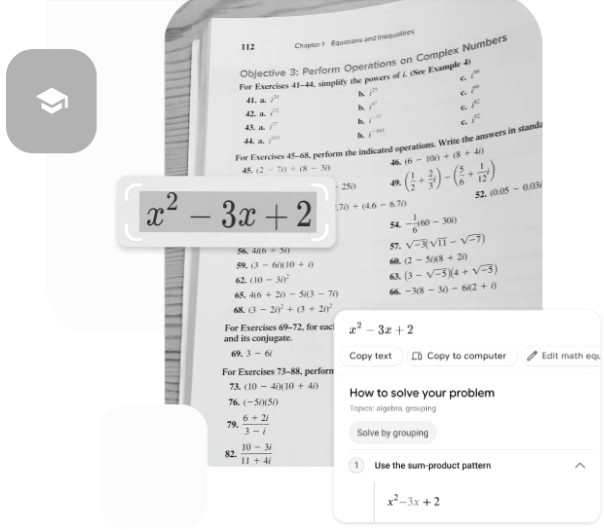

A version of the feature has also been extended to ordinary still images. The person making the query can improve the chances of a thorough result by being specific in the spoken question.

Nigerian shoppers in particular benefit from a product that combines these features, including the ability to discover or recall music through Circle to Search. This makes it easier to search for songs without leaving the app, because a one-stop shop is just what Lens users need.

A long press on the home button or navigation bar activates Circle to Search, revealing a music button. This identifies the track name and the artist, and opens a YouTube video for further exploration without switching apps.

The in-app opportunity to get homework help makes Google more relatable to young learners pushing up to the next level in a fast-paced world. More and more, it looks like the company is listening to everyone, even where voices were never heard before.

Experimenting with this is as easy as taking a picture, perhaps of text typed in a publishing application, and Circle to Search translates it into the preferred language, such as from English to Yoruba or Igbo to Hausa, just by holding the camera over the screen long enough.

Google Search executive Elizabeth Reid confirmed that all of these features, which allow very quick interactions, have been fully launched since yesterday, 3 October 2024. Users will probably see a mobile experience that gets smoother by the day.